43 9.4 Measurement quality

Learning Objectives

- Define reliability and describe the types of reliability

- Define validity and describe the types of validity

- Analyze the rigor of qualitative measurement using the criteria of trustworthiness and authenticity

In quantitative research, once we’ve managed to define our terms and specify the operations for measuring them, how do we know that our measures are any good? Without some assurance of the quality of our measures, we cannot be certain that our findings have any meaning or, at the least, that our findings mean what we think they mean. When social scientists measure concepts, they aim to achieve reliability and validity in their measures. These two aspects of measurement quality are the focus of this section. We’ll consider reliability first and then take a look at validity. For both aspects of measurement quality, let’s say our interest is in measuring the concepts of alcoholism and alcohol intake. What are some potential problems that could arise when attempting to measure this concept, and how might we work to overcome those problems?

Reliability

First, let’s say we’ve decided to measure alcoholism by asking people to respond to the following question: Have you ever had a problem with alcohol? If we measure alcoholism in this way, it seems likely that anyone who identifies as an alcoholic would respond with a yes to the question. So, this must be a good way to identify our group of interest, right? Well, maybe. Think about how you or others you know would respond to this question. Would responses differ after a wild night out from what they would have been the day before? Might an infrequent drinker’s current headache from the single glass of wine she had last night influence how she answers the question this morning? How would that same person respond to the question before consuming the wine? In each of these cases, if the same person would respond differently to the same question at different points, it is possible that our measure of alcoholism has a reliability problem. Reliability in measurement is about consistency.

One common problem of reliability with social scientific measures is memory. If we ask research participants to recall some aspect of their own past behavior, we should try to make the recollection process as simple and straightforward for them as possible. Sticking with the topic of alcohol intake, if we ask respondents how much wine, beer, and liquor they’ve consumed each day over the course of the past 3 months, how likely are we to get accurate responses? Unless a person keeps a journal documenting their intake, there will very likely be some inaccuracies in their responses. If, on the other hand, we ask a person how many drinks of any kind they have consumed in the past week, we might get a more accurate set of responses.

Reliability can be an issue even when we’re not reliant on others to accurately report their behaviors. Perhaps a researcher is interested in observing how alcohol intake influences interactions in public locations. She may decide to conduct observations at a local pub, noting how many drinks patrons consume and how their behavior changes as their intake changes. But what if the researcher has to use the restroom and misses the three shots of tequila that the person next to her downs during the brief period she is away? The reliability of this researcher’s measure of alcohol intake, counting numbers of drinks she observes patrons consume, depends on her ability to actually observe every instance of patrons consuming drinks. If she is unlikely to be able to observe every such instance, then perhaps her mechanism for measuring this concept is not reliable.

If a measure is reliable, it means that if the measure is given multiple times, the results will be consistent each time. For example, if you took the SATs on multiple occasions before coming to school, your scores should be relatively the same from test to test. This is what is known as test-retest reliability. In the same way, if a person is clinically depressed, a depression scale should give similar (though not necessarily identical) results today that it does two days from now.

Additionally, if your study involves observing people’s behaviors, for example watching sessions of mothers playing with infants, you may also need to assess inter-rater reliability. Inter-rater reliability is the degree to which different observers agree on what happened. Did you miss when the infant offered an object to the mother and the mother dismissed it? Did the other person rating miss that event? Do you both similarly rate the parent’s engagement with the child? Again, scores of multiple observers should be consistent, though perhaps not perfectly identical.

Finally, for scales, internal consistency reliability is an important concept. The scores on each question of a scale should be correlated with each other, as they all measure parts of the same concept. Think about a scale of depression, like Beck’s Depression Inventory. A person who is depressed would score highly on most of the measures, but there would be some variation. If we gave a group of people that scale, we would imagine there should be a correlation between scores on, for example, mood disturbance and lack of enjoyment. They aren’t the same concept, but they are related. So, there should be a mathematical relationship between them. A specific statistical test known as Cronbach’s Alpha provides a way to measure how well each question of a scale is related to the others.

Test-retest, inter-rater, and internal consistency are three important subtypes of reliability. Researchers use these types of reliability to make sure their measures are consistently measuring the concepts in their research questions.

Validity

While reliability is about consistency, validity is about accuracy. What image comes to mind for you when you hear the word alcoholic? Are you certain that the image you conjure up is similar to the image others have in mind? If not, then we may be facing a problem of validity.

For a measure to have validity, we must be certain that our measures accurately get at the meaning of our concepts. Think back to the first possible measure of alcoholism we considered in the previous few paragraphs. There, we initially considered measuring alcoholism by asking research participants the following question: Have you ever had a problem with alcohol? We realized that this might not be the most reliable way of measuring alcoholism because the same person’s response might vary dramatically depending on how they are feeling that day. Likewise, this measure of alcoholism is not particularly valid. What is “a problem” with alcohol? For some, it might be having had a single regrettable or embarrassing moment that resulted from consuming too much. For others, the threshold for “problem” might be different; perhaps a person has had numerous embarrassing drunken moments but still gets out of bed for work every day, so they don’t perceive themselves as having a problem. Because what each respondent considers to be problematic could vary so dramatically, our measure of alcoholism isn’t likely to yield any useful or meaningful results if our aim is to objectively understand, say, how many of our research participants are alcoholics. [1]

In the last paragraph, critical engagement with our measure for alcoholism “Do you have a problem with alcohol?” was shown to be flawed. We assessed its face validity or whether it is plausible that the question measures what it intends to measure. Face validity is a subjective process. Sometimes face validity is easy, as a question about height wouldn’t have anything to do with alcoholism. Other times, face validity can be more difficult to assess. Let’s consider another example.

Perhaps we’re interested in learning about a person’s dedication to healthy living. Most of us would probably agree that engaging in regular exercise is a sign of healthy living, so we could measure healthy living by counting the number of times per week that a person visits their local gym. But perhaps they visit the gym to use their tanning beds or to flirt with potential dates or sit in the sauna. These activities, while potentially relaxing, are probably not the best indicators of healthy living. Therefore, recording the number of times a person visits the gym may not be the most valid way to measure their dedication to healthy living.

Another problem with this measure of healthy living is that it is incomplete. Content validity assesses for whether the measure includes all of the possible meanings of the concept. Think back to the previous section on multidimensional variables. Healthy living seems like a multidimensional concept that might need an index, scale, or typology to measure it completely. Our one question on gym attendance doesn’t cover all aspects of healthy living. Once you have created one, or found one in the existing literature, you need to assess for content validity. Are there other aspects of healthy living that aren’t included in your measure?

Let’s say you have created (or found) a good scale, index, or typology for your measure of healthy living. A valid measure of healthy living would be able to predict, for example, scores of a blood panel test during their annual physical. This is called predictive validity, and it means that your measure predicts things it should be able to predict. In this case, I assume that if you have a healthy lifestyle, a standard blood test done a few months later during an annual checkup would show healthy results. If we were to administer the blood panel measure at the same time as you administer your scale of healthy living, we would be assessing concurrent validity. Concurrent validity is the same as predictive validity—the scores on your measure should be similar to an established measure—except that both measures are given at the same time.

Another closely related concept is convergent validity. In assessing for convergent validity, one should look for existing measures of the same concept, for example the Healthy Lifestyle Behaviors Scale (HLBS). If you give someone your scale and the HLBS at the same time, their scores should be pretty similar. Convergent validity takes an existing measure of the same concept and compares your measure to it. If their scores are similar, then it’s probably likely that they are both measuring the same concept. Discriminant validity is a similar concept, except you would be comparing your measure to one that is entirely unrelated. A participant’s scores on your healthy lifestyle measure shouldn’t be statistically correlated with a scale that measures knowledge of the Italian language.

These are the basic subtypes of validity, though there are certainly others you can read more about. One way to think of validity is to think of it as you would a portrait. Some portraits of people look just like the actual person they are intended to represent. But other representations of people’s images, such as caricatures and stick drawings, are not nearly as accurate. While a portrait may not be an exact representation of how a person looks, what’s important is the extent to which it approximates the look of the person it is intended to represent. The same goes for validity in measures. No measure is exact, but some measures are more accurate than others.

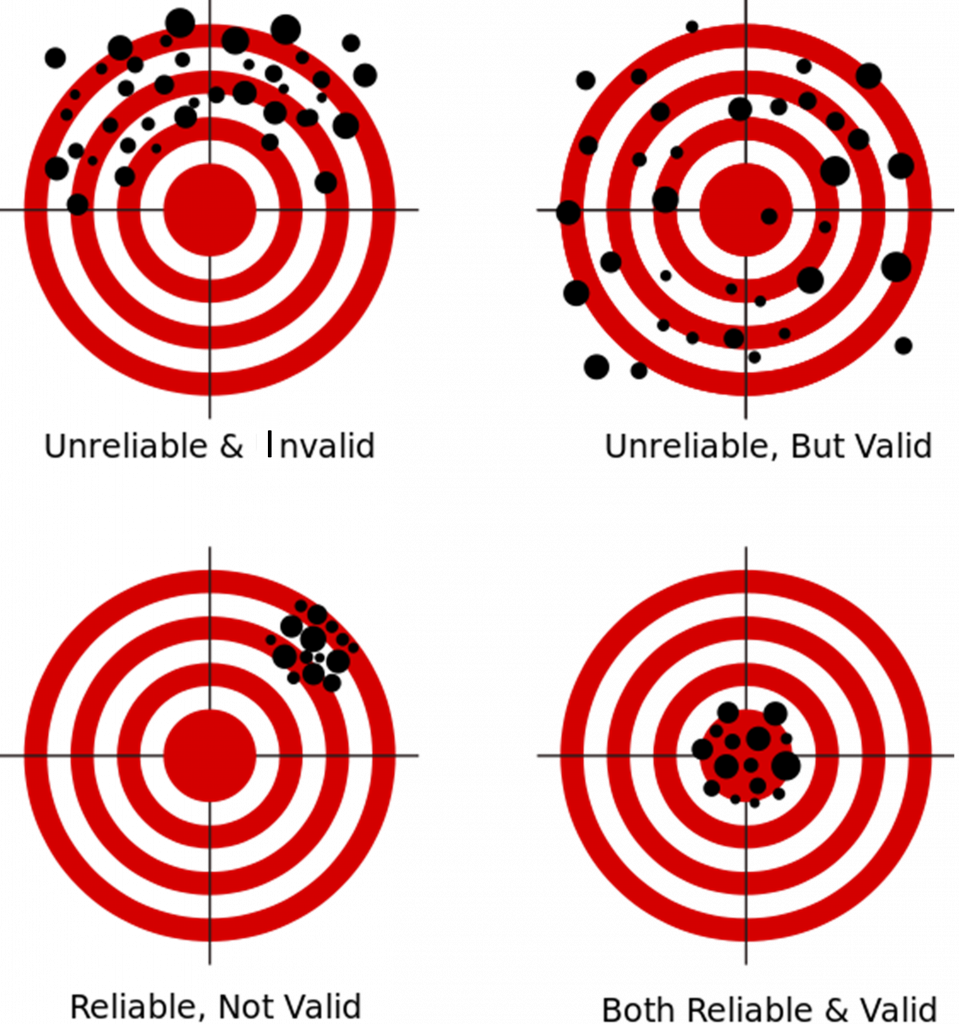

If you are still confused about validity and reliability, Figure 9.2 shows what a validity and reliability look like. On the first target, our shooter’s aim is all over the place. It is neither reliable (consistent) nor valid (accurate). The second (top right) target shows an unreliable or inconsistent shot, but one that is centered around the target (accurate). The third (bottom left) target demonstrates consistency…but it is reliably off-target, or invalid. The fourth and final target (bottom right) represents a reliable and valid result. The person is able to hit the target accurately and consistently. This is what you should aim for in your research.

Trustworthiness and authenticity

In qualitative research, the standards for measurement quality differ than quantitative research for an important reason. Measurement in quantitative research is done objectively or impartially. That is, the researcher doesn’t have much to do with it. The researcher chooses a measure, applies it, and reads the results. The extent to which the results are accurate and consistent is a problem with the measure, not the researcher.

The same cannot be said for qualitative research. Qualitative researchers are deeply involved in the data analysis process. There is no external measurement tool, like a quantitative scale. Rather, the researcher herself is the measurement instrument. Researchers build connections between different ideas that participants discuss and draft an analysis that accurately reflects the depth and complexity of what participants have said. This is a challenging task for a researcher. It involves acknowledging her own biases, either from personal experience or previous knowledge about the topic, and allowing the meaning that participants shared to emerge as the data is read. It’s not necessarily about being objective, as there is always some subjectivity in qualitative analysis, but more about the rigor with which the individual researcher engages in data analysis.

For this reason, researchers speak of rigor in more personal terms. Trustworthiness refers to the “truth value, applicability, consistency, and neutrality” of the results of a research study (Rodwell, 1998, p. 96). [3] Authenticity refers to the degree to which researchers capture the multiple perspectives and values of participants in their study and foster change across participants and systems during their analysis. Both trustworthiness and authenticity contain criteria that help a researcher gauge the rigor with which the study was conducted.

Most relevant to the discussion of validity and reliability are the trustworthiness criteria of credibility, dependability, and confirmability. Credibility refers to the degree to which the results are accurate and viewed as important and believable by participants. Qualitative researchers will often check with participants before finalizing and publishing their results to make sure participants agree with them. They may also seek out assistance from another qualitative researcher to review or audit their work. As you might expect, it’s difficult to view your own research without bias, so another set of eyes is often helpful. Unlike in quantitative research, the ultimate goal is not to find the Truth (with a capital T) using a predetermined measure, but to create a credible interpretation of the data.

Credibility is seen as akin to validity, as it mainly speaks to the accuracy of the research product. The criteria of dependability, on the other hand, is similar to reliability. As we just reviewed, reliability is the consistency of a measure. If you give the same measure each time, you should get similar results. However, qualitative research questions, hypotheses, and interview questions may change during the research process. How can one achieve reliability under such conditions?

Because emergence is built into the procedures of qualitative data analysis, there isn’t a need for everyone to get the exact same questions each time. Indeed, because qualitative research understands the importance of context, it would be impossible to control all of the things that would make a qualitative measure the same when you give it to each person. The location, timing, or even the weather can and do influence participants to respond differently. Researchers assessing dependability make sure that proper qualitative procedures were followed and that any changes that emerged during the research process are accounted for, justified, and described in the final report. Researchers should document changes to their methodology and the justification for them in a journal or log. In addition, researchers may again use another qualitative researcher to examine their logs and results to ensure dependability.

Finally, the criteria of confirmability refers to the degree to which the results reported are linked to the data obtained from participants. While it is possible that another researcher could view the same data and come up with a different analysis, confirmability ensures that a researcher’s results are actually grounded in what participants said. Another researcher should be able to read the results of your study and trace each point made back to something specific that one or more participants shared. This process is called an audit.

The criteria for trustworthiness were created as a reaction to critiques of qualitative research as unscientific (Guba, 1990). [4] They demonstrate that qualitative research is equally as rigorous as quantitative research. Subsequent scholars conceptualized the dimension of authenticity without referencing the standards of quantitative research at all. Instead, they wanted to understand the rigor of qualitative research on its own terms. What comes from acknowledging the importance of the words and meanings that people use to express their experiences?

While there are multiple criteria for authenticity, the one that is most important for undergraduate social work researchers to understand is fairness. Fairness refers to the degree to which “different constructions, perspectives, and positions are not only allowed to emerge, but are also seriously considered for merit and worth” (Rodwell, 1998, p. 107). Qualitative researchers, depending on their design, may involve participants in the data analysis process, try to equalize power dynamics among participants, and help negotiate consensus on the final interpretation of the data. As you can see from the talk of power dynamics and consensus-building, authenticity attends to the social justice elements of social work research.

After fairness, the criteria for authenticity become more radical, focusing on transforming individuals and systems examined in the study. For our purposes, it is important for you to know that qualitative research and measurement are conducted with the same degree of rigor as quantitative research. The standards may be different, but they speak to the goals of accurate and consistent results that reflect the views of the participants in the study.

Key Takeaways

- Reliability is a matter of consistency.

- Validity is a matter of accuracy.

- There are many types of validity and reliability.

- The criteria that qualitative researchers use to assess rigor are trustworthiness and authenticity.

- Quantitative research is not inherently more rigorous than qualitative research. Both are equally rigorous, though the standards for assessing rigor differ between the two.

Glossary

- Authenticity- the degree to which researchers capture the multiple perspectives and values of participants in their study and foster change across participants and systems during their analysis

- Concurrent validity- if a measure is able to predict outcomes from an established measure given at the same time

- Confirmability- the degree to which the results reported are linked to the data obtained from participants

- Content validity- if the measure includes all of the possible meanings of the concept

- Convergent validity- if a measure is conceptually similar to an existing measure of the same concept

- Credibility- the degree to which the results are accurate and viewed as important and believable by participants

- Dependability- ensures that proper qualitative procedures were followed and that any changes that emerged during the research process are accounted for, justified, and described in the final report

- Discriminant validity- if a measure is not related to measures to which it shouldn’t be statistically correlated

- Face validity- if it is plausible that the measure measures what it intends to

- Fairness- the degree to which “different constructions, perspectives, and positions are not only allowed to emerge, but are also seriously considered for merit and worth” (Rodwell, 1998, p. 107)

- Internal consistency reliability- degree to which scores on each question of a scale are correlated with each other

- Inter-rater reliability- the degree to which different observers agree on what happened

- Predictive validity- if a measure predicts things it should be able to predict in the future

- Reliability- a measure’s consistency.

- Test-retest reliability- if a measure is given multiple times, the results will be consistent each time

- Trustworthiness- the “truth value, applicability, consistency, and neutrality” of the results of a research study (Rodwell, 1998, p. 96)

- Validity- a measure’s accuracy

- Of course, if our interest is in how many research participants perceive themselves to have a problem, then our measure may be just fine. ↵

- Figure 9.2 was adapted from Nevit Dilmen’s “Reliability and validity” (2012) Shared under a CC-BY 3.0 license Retrieved from: https://commons.wikimedia.org/wiki/File:Reliability_and_validity.svg I changed the word unvalid to invalid to reflect more commonly used language. ↵

- Rodwell, M. K. (1998). Social work constructivist research. New York, NY: Garland Publishing. ↵

- Guba, E. G. (1990). The paradigm dialog. Newbury Park, CA: Sage Publications. ↵